Elena Canorea

Communications Lead

Intro

The arrival of generative AI has opened a new paradigm for businesses. Its many use cases, the potential to improve processes and raise productivity, and the empowerment of employees have led many companies to implement this technology, but the vast majority are not doing so properly.

As with any evolving technology, the benefits it brings can be undermined by the security gaps it opens if it is not implemented securely and deliberately. Purview plays a fundamental role in enabling the development and deployment of responsible AI in Copilot. Here is how.

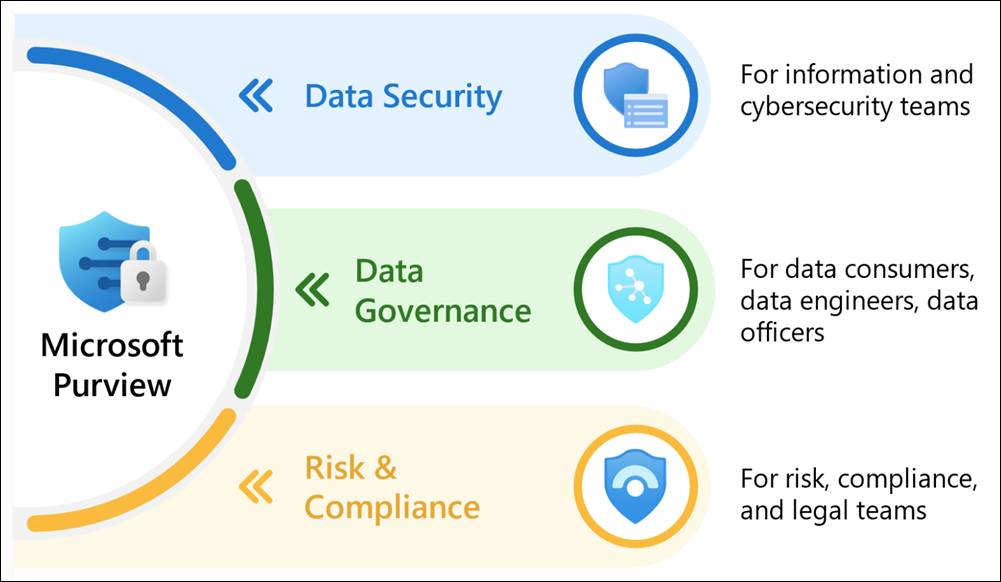

Purview is presented as a comprehensive solution for insight into the data estate that supports data protection and governance. It was created to offer capabilities that help companies discover, protect, and manage information wherever it resides.

It offers capabilities to catalog, map, and monitor sensitive data across the organization's entire data landscape. This gives professionals greater visibility and control to assess and mitigate the potential ethical risks of AI when building and launching Copilot-powered applications.

Microsoft Purview lets you manage and protect your data through advantages such as:

The Purview data catalog maps where personally identifiable information, financial information, health data, and other sensitive data reside across on-premises, multicloud, and SaaS environments. This inventory makes it possible to identify datasets that could lead to bias, fairness, or confidentiality problems if they are used to train AI models.

It also applies sensitivity labels to tag and categorize the sensitive data it identifies, which supports proper handling of datasets when developing Copilot applications and helps ensure they are anonymized or synthesized where necessary.

Purview's data lineage feature, for its part, provides visibility into upstream data flows from source to consumption. It shows how the different data sources in an organization are interconnected and used. Combined with the catalog, it gives development teams full visibility into the data before they launch Copilot-enabled applications.

In production, Purview's continuous scanning and monitoring capabilities keep the AI data estate under control. Any new sensitive data that appears is flagged immediately through automated classification and labeling. Purview also includes trainable classifiers, which allow custom identification of sensitive data types beyond the default patterns.

By using sample files to train the model, organization-specific data such as product codes, customer IDs, or proprietary content can be detected quickly, ensuring comprehensive data governance across structured, unstructured, and custom data sources. Scanning can trigger notifications to data owners if unwanted data is detected in training data, allowing quick remediation to maintain AI ethics and compliance.
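To make the detect-and-notify flow more concrete, here is a minimal sketch in Python. It is not a trainable classifier and does not call any Purview API: it swaps in plain regular expressions as a rule-based stand-in. The identifier formats (`PRD-` and `CUST-`), the `scan` and `notification_for` helpers, and the file path and owner address are all hypothetical and exist only for illustration.

```python
import re

# Hypothetical identifier formats for an imaginary organization.
# A trainable classifier would learn from sample files instead;
# fixed patterns are used here only to keep the sketch short.
PATTERNS = {
    "product_code": re.compile(r"\bPRD-\d{6}\b"),
    "customer_id": re.compile(r"\bCUST-[A-Z]{2}\d{4}\b"),
}


def scan(text):
    """Return a dict mapping each matched data type to the values found."""
    findings = {}
    for name, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            findings[name] = matches
    return findings


def notification_for(path, owner, findings):
    """Build a message for the data owner, or None if nothing was found."""
    if not findings:
        return None
    kinds = ", ".join(sorted(findings))
    return f"To {owner}: {path} contains sensitive data types: {kinds}"


sample = "Order for CUST-ES1234 includes PRD-004217."
found = scan(sample)
print(notification_for("training/orders.txt", "owner@example.com", found))
```

In a real deployment the scan results would come from Purview itself; the point of the sketch is only the shape of the flow: classify content, then alert the owner when something unwanted shows up.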

Access control and data governance become even more critical as the use of Copilot and other AI tools becomes widespread. With Purview, however, this risk can be addressed thanks to:

Microsoft 365 Copilot uses existing controls to ensure that data stored in your tenant is never returned to the user or used by a large language model (LLM) if the user does not have access to that data. If the data has your organization's sensitivity labels applied to the content, there is an additional layer of protection when:

When Microsoft 365 Copilot is used to create new content based on an item that has a sensitivity label applied, the source file's sensitivity label is inherited automatically, along with the label's protection settings.

If multiple files are used to create new content, the sensitivity label with the highest priority is used for label inheritance. As with all automatic labeling scenarios, the user can always override and replace an inherited label (or remove it, if mandatory labeling is not in use).
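The inheritance rule for multiple source files can be sketched in a few lines of Python. This is an illustration of the rule as described, not Copilot's implementation. The `SensitivityLabel` class, the `inherited_label` function, and the label names are hypothetical, and the sketch assumes that a larger `priority` number means a higher-priority label.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SensitivityLabel:
    name: str
    priority: int  # assumption: a larger number means a higher priority


def inherited_label(
    source_labels: List[Optional[SensitivityLabel]],
) -> Optional[SensitivityLabel]:
    """Return the highest-priority label among the sources.

    Unlabeled sources are represented by None and are ignored.
    Returns None when no source carries a label.
    """
    labeled = [label for label in source_labels if label is not None]
    if not labeled:
        return None
    return max(labeled, key=lambda label: label.priority)


# Hypothetical labels for illustration only.
general = SensitivityLabel("General", 1)
confidential = SensitivityLabel("Confidential", 2)
highly_confidential = SensitivityLabel("Highly Confidential", 3)

# New content drawn from three labeled files and one unlabeled file.
result = inherited_label([general, None, highly_confidential, confidential])
print(result.name)
```

The user override mentioned above would happen after this step: the inherited label is a starting point that the author can replace.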

Purview's compliance capabilities can be used with enterprise data protection to support the risk and compliance requirements of Microsoft 365 Copilot and Microsoft Copilot:

For communication compliance, you can analyze user prompts and Copilot responses to detect inappropriate or risky interactions or the sharing of confidential information.

For auditing, details are captured in the unified audit log when users interact with Copilot. Events include how and when users interact with Copilot, the Microsoft 365 service in which the activity took place, and references to the files stored in Microsoft 365 that were accessed during the interaction. If these files have a sensitivity label applied, that is captured too.
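As a rough illustration of how such audit details might be reviewed once exported, the Python sketch below filters a small set of records for Copilot interactions and counts the sensitivity labels on the files they touched. The JSON structure, every field name, and the `CopilotInteraction` operation value are assumptions made for this example and are not taken from the actual audit log schema. The users and files are invented.

```python
import json
from collections import Counter

# Hypothetical export of audit records. Field names and values are
# illustrative and do not reflect the real unified audit log schema.
SAMPLE_EXPORT = """
[
  {"operation": "CopilotInteraction", "user": "ana@contoso.com",
   "app": "Word", "time": "2024-05-02T09:15:00Z",
   "files": [{"name": "plan.docx", "label": "Confidential"}]},
  {"operation": "FileAccessed", "user": "luis@contoso.com",
   "app": "SharePoint", "time": "2024-05-02T09:20:00Z", "files": []},
  {"operation": "CopilotInteraction", "user": "luis@contoso.com",
   "app": "Teams", "time": "2024-05-02T10:00:00Z",
   "files": [{"name": "notes.docx", "label": null},
             {"name": "budget.xlsx", "label": "Confidential"}]}
]
"""


def copilot_events(records):
    """Keep only the records that describe a Copilot interaction."""
    return [r for r in records if r["operation"] == "CopilotInteraction"]


def labeled_file_counts(events):
    """Count accessed files per sensitivity label, skipping unlabeled files."""
    counts = Counter()
    for event in events:
        for accessed in event["files"]:
            if accessed["label"] is not None:
                counts[accessed["label"]] += 1
    return counts


records = json.loads(SAMPLE_EXPORT)
events = copilot_events(records)
print([(e["user"], e["app"], e["time"]) for e in events])
print(labeled_file_counts(events))
```

A summary like this answers the questions the audit log is meant to support: who used Copilot, in which service, when, and whether labeled files were involved.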

For content search, because user prompts to Copilot and Copilot's responses are stored in a user's mailbox, they can be searched and retrieved when the user's mailbox is selected as the source for a search query.

Similarly, for eDiscovery, the same query process is used to select mailboxes and retrieve user prompts to Copilot and Copilot's responses. Once the collection has been created and sourced into the review phase in eDiscovery (Premium), this data is available for all existing review actions. These collections and review sets can then be put on hold or exported.

For retention policies that support automatic retention and deletion, user prompts and Copilot responses are identified by the location Teams chats and Copilot interactions. Existing retention policies previously configured for Teams chats now automatically include user prompts and responses to and from Microsoft 365 Copilot and Microsoft Copilot.

As with all retention policies and holds, if more than one policy for the same location applies to a user, the principles of retention resolve any conflicts.
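The article does not spell out the principles of retention, so the Python sketch below is a simplified reading of three of them: retention wins over deletion, the longest retention period wins, and the shortest deletion period wins. It is an assumption-laden illustration, not the full rule set. The `Policy` class, the `resolve` function, and the policy names and periods are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Policy:
    name: str
    retain_days: Optional[int] = None        # None: no retain action
    delete_after_days: Optional[int] = None  # None: no delete action


def resolve(policies: List[Policy]) -> Tuple[Optional[int], Optional[int]]:
    """Return the effective (retain_days, delete_after_days).

    Simplified assumptions:
    - the longest retention period wins,
    - the shortest deletion period wins,
    - retention wins over deletion, so nothing is deleted
      before the effective retention period has ended.
    """
    retain = max(
        (p.retain_days for p in policies if p.retain_days is not None),
        default=None,
    )
    delete = min(
        (p.delete_after_days for p in policies
         if p.delete_after_days is not None),
        default=None,
    )
    if retain is not None and delete is not None and delete < retain:
        delete = retain  # deletion is postponed until retention ends
    return retain, delete


# Hypothetical policies applying to the same user and location.
policies = [
    Policy("Keep chats one year", retain_days=365),
    Policy("Clean up after 30 days", delete_after_days=30),
    Policy("Legal: keep two years", retain_days=730),
]
print(resolve(policies))
```

With these three example policies, the two-year retention sets the effective period and the 30-day cleanup cannot remove anything earlier.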

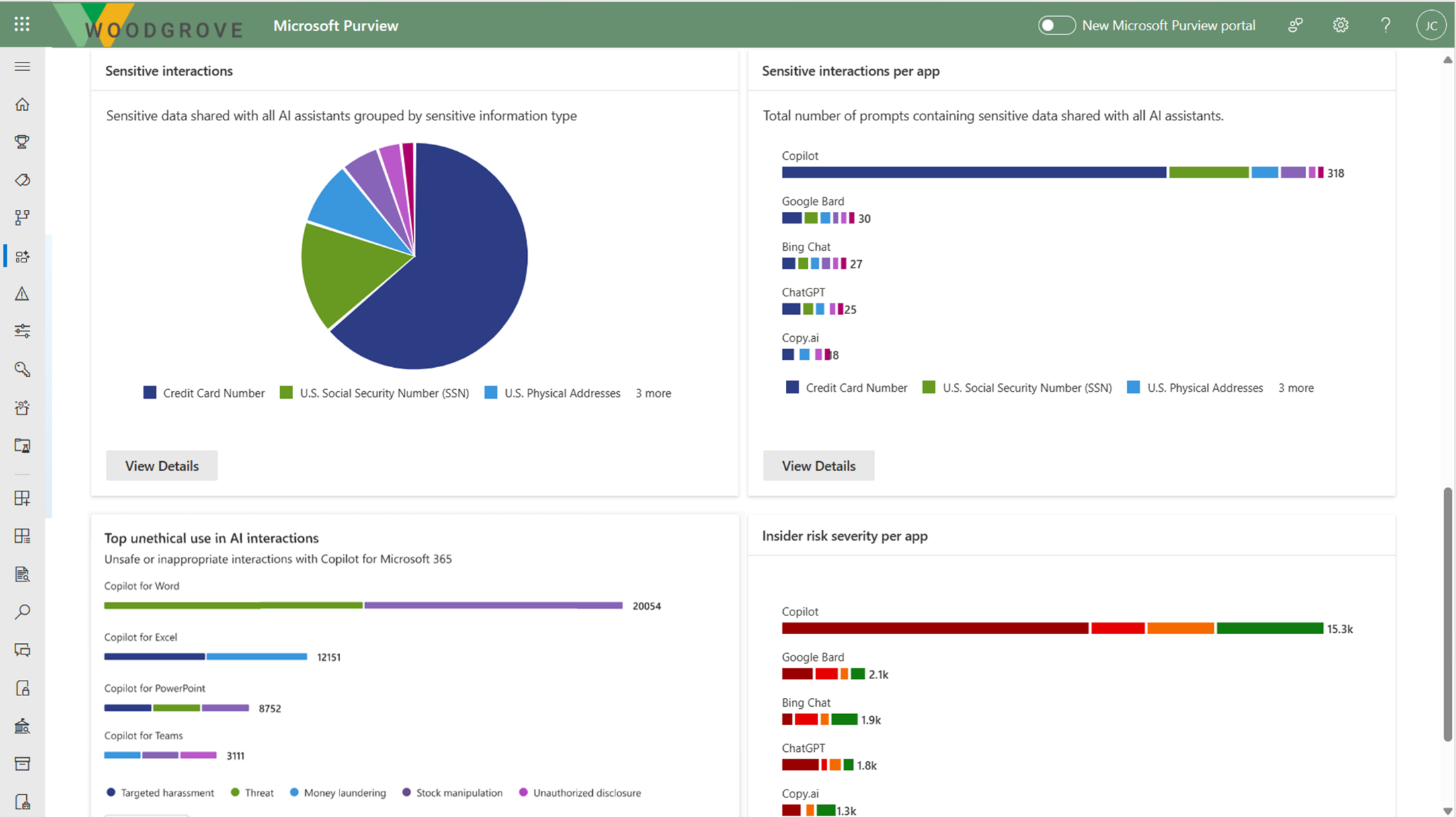

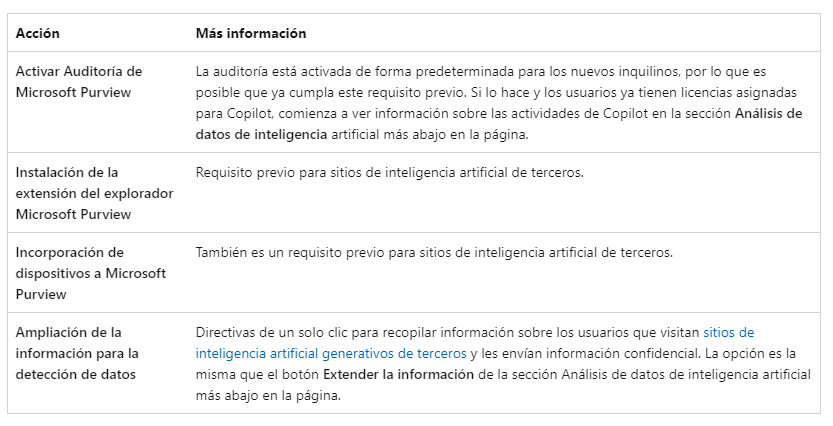

The Microsoft Purview AI Hub is in preview and provides easy-to-use graphical tools and reports to quickly gain insight into AI use within your organization. One-click policies help you protect your data and comply with regulatory requirements.

You can use the AI Hub together with other Purview capabilities to strengthen data security and compliance for Microsoft 365 Copilot and Microsoft Copilot:

The AI Hub provides a central management location that helps you quickly secure data for AI apps and proactively monitor the use of this technology. You can learn more in this explainer video.

It also offers a set of capabilities so that you can adopt AI safely, without having to choose between productivity and protection:

To help you gain insights faster, the AI Hub provides some preconfigured policies that can be activated with a single click. You only need to wait 24 hours for these new policies to collect data and display results in the hub, or to reflect any changes you make to the default settings.

To get started, you can use either the Microsoft Purview portal or the compliance portal, and you need the appropriate permissions for compliance management.

Thanks to its end-to-end visibility of sensitive data, automated insights, and policy enforcement, Purview is indispensable for the ethical and secure use of Copilot. Its ability to bring Defender, Sentinel, Intune, and Entra together in a single dashboard lets professionals assess AI risks early, design appropriate controls, and maintain responsible oversight after deployment.

For all these reasons, Purview will help you ensure that Copilot respects your organizational values, regulations, and AI ethics best practices. This, in turn, translates into greater trust with customers, as well as the transparency and governance of the data estate needed for ethical, compliant innovation.

The Plain Concepts security team is ready to help you deploy Microsoft Purview as part of your enterprise security strategy, covering areas such as information protection, unified data governance, intelligent lifecycle management, insider risk management, auditing, compliance management, and the NIS2 Directive. Don't wait any longer: contact our experts and transform the way you work, securely!

To help you get familiar with Purview and Copilot, here is the webinar “Microsoft Purview: Exprime Copilot con la máxima seguridad”, a unique opportunity to learn how this powerful combination not only raises our productivity but also strengthens our commitment to security and regulatory compliance.
