The Evergine 2025 release is here!

We are thrilled to announce a new major milestone for Evergine. Our latest release introduces a wide array of new features, performance enhancements, and tools designed to empower developers across industries.

At Plain Concepts, we’ve carefully developed Evergine as the graphics engine that drives our internal projects. For over a decade, our licensing model has been built on a foundation of accessibility, ensuring that all users can benefit from its capabilities:

- Evergine is completely free for commercial use.

- No registration is required.

- We do not monitor your usage or activity (no telemetry).

- The license is valid for all industries.

What’s new?

For this new release, we have focused on delivering native support for key file formats used in various industries, such as STL and DICOM in healthcare. We have also added more built-in runtime loaders for images and videos.

Additionally, we remain committed to staying up to date with the latest technologies used in industries like Energy and Construction. This includes support for new reality capture technologies such as Gaussian Splatting, making these innovations accessible to more companies.

Finally, we have introduced new features based on feedback from our customers working on serious industrial projects. These include updates to the Web templates, a new modular shader design, the Evergine.Mocks library for unit testing, and enhanced AI tools in Evergine Studio.

Download Evergine 2025 today and start creating industrial real-time experiences!

Major Highlights

- New Gaussian Splatting Add-on

- New DICOM Add-on

- New Evergine Runtimes

- Subsurface Scattering Support

- AI assets generation improvements

- Modularize Standard Effect

- New Evergine Mocks library

- Web development improvements

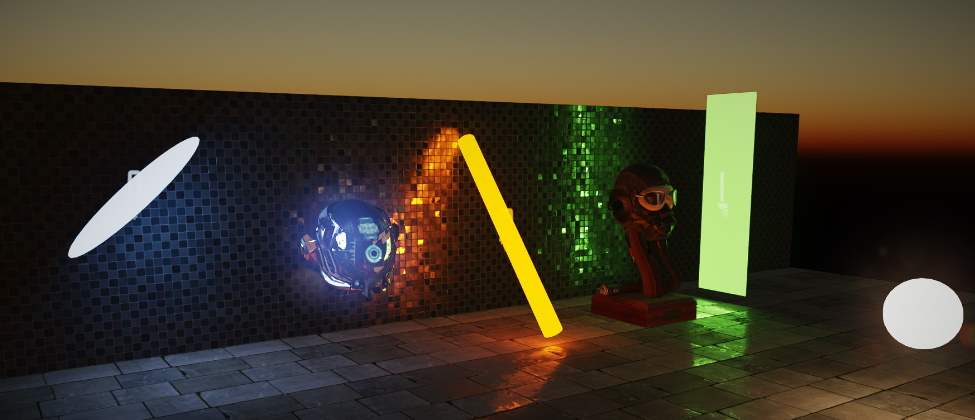

New Gaussian Splatting Add-on

In recent years, new techniques have emerged that significantly improve visual quality in reality capture scenarios. Neural Radiance Fields (NeRF), for example, have revolutionized scene capture using multiple photos or videos. We have been closely following these advancements, and in previous releases we created a sample based on InstantNGP from Nvidia.

However, achieving high visual quality with these methods often requires neural networks that are computationally expensive to render.

To enable real-time rendering, a new approach called Gaussian Splatting has been introduced. This technique represents a 3D scene using a collection of Gaussian primitives, allowing for fast, high-quality rendering without requiring expensive neural network inference.
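As a rough illustration of the idea, the sketch below shades one pixel by blending 2D Gaussians front to back. It is a simplified teaching example, not the add-on's implementation: the `Splat` type, the isotropic `sigma`, and the function names are all assumptions made for this sketch, and real splats are anisotropic 3D Gaussians that are projected to the screen before blending.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    """Hypothetical screen-space Gaussian, assumed already projected from 3D."""
    cx: float        # center x in pixels
    cy: float        # center y in pixels
    sigma: float     # isotropic standard deviation in pixels (simplification)
    opacity: float   # peak opacity in [0, 1]
    color: tuple     # (r, g, b), each in [0, 1]
    depth: float     # distance from the camera


def splat_alpha(splat, x, y):
    """Opacity contributed by one Gaussian at pixel (x, y)."""
    d2 = (x - splat.cx) ** 2 + (y - splat.cy) ** 2
    return splat.opacity * math.exp(-0.5 * d2 / (splat.sigma ** 2))


def shade_pixel(splats, x, y):
    """Blend the splats front to back using standard alpha compositing."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for splat in sorted(splats, key=lambda s: s.depth):
        alpha = splat_alpha(splat, x, y)
        for i in range(3):
            color[i] += transmittance * alpha * splat.color[i]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque, stop early
            break
    return tuple(color)
```

No neural network is evaluated here: each pixel only needs a sort by depth and a handful of exponentials, which is why this family of techniques maps well to real-time rendering.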

Over the past few months, we have been developing a new add-on that allows users to easily create Gaussian Splatting viewers for both desktop and web platforms. We are excited to announce that it is now publicly available!

Additionally, we have updated our previous sample based on InstantNGP to a new version that leverages Gaussian Splatting technology.

To explore this topic in-depth, visit: Gaussian Splatting Add-on in Evergine

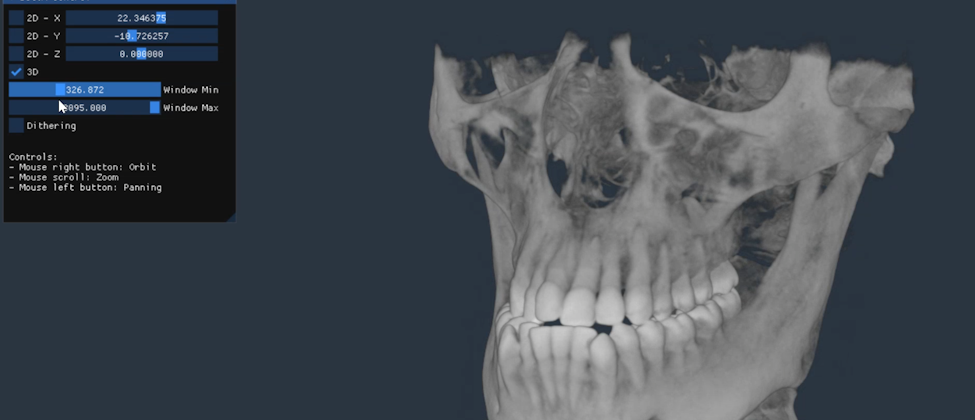

New DICOM Add-on

Some of our healthcare customers are developing medical applications. In this industry, one of the most widely used file formats is DICOM (Digital Imaging and Communications in Medicine), which is commonly used to store and transmit medical images such as X-rays, CT scans, MRIs, and ultrasound images.

To support this, we decided to create a built-in add-on that simplifies the development of DICOM viewers, making it easier to load and render medical images. This add-on is fully supported on the web platform, allowing developers to create web-based medical imaging applications without additional complexity.

Since DICOM images can contain volumetric data, a specialized rendering pipeline is required. This pipeline utilizes ray tracing techniques to accurately visualize the 3D structure of medical scans.
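To give a feel for what rendering volumetric data involves, here is a minimal sketch of marching a single ray through a voxel grid and accumulating opacity. It shows generic volume ray marching under stated assumptions, not the add-on's actual pipeline: the nested-list volume layout, the nearest-neighbour sampling, and every name and parameter below are hypothetical.

```python
import math


def sample_volume(volume, x, y, z):
    """Nearest-neighbour density lookup; returns 0.0 outside the grid."""
    i, j, k = int(round(x)), int(round(y)), int(round(z))
    if (0 <= i < len(volume)
            and 0 <= j < len(volume[0])
            and 0 <= k < len(volume[0][0])):
        return volume[i][j][k]
    return 0.0


def march_ray(volume, origin, direction, step=0.5, max_steps=256):
    """Walk along a ray and composite samples front to back.

    `direction` is assumed to be a unit vector in voxel space.
    Returns (accumulated intensity, accumulated opacity).
    """
    x, y, z = origin
    dx, dy, dz = direction
    intensity = 0.0
    transmittance = 1.0
    for _ in range(max_steps):
        density = sample_volume(volume, x, y, z)
        # Beer-Lambert absorption over one step of length `step`
        alpha = 1.0 - math.exp(-density * step)
        intensity += transmittance * alpha * density
        transmittance *= 1.0 - alpha
        if transmittance < 1e-3:  # ray is almost fully absorbed
            break
        x, y, z = x + dx * step, y + dy * step, z + dz * step
    return intensity, 1.0 - transmittance
```

A viewer would typically cast one such ray per pixel on the GPU and map density to color through a transfer function, which this sketch leaves out.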

Additionally, we have developed a new Evergine sample showcasing how to build a DICOM viewer using this new major release.

For more information, visit: DICOM Add-On

New Evergine Runtimes

Last year, we introduced new built-in libraries in Evergine to enable asset loading at runtime. This allows users to load assets directly once the application has started, without the need to convert them to a specific engine format. As a result, loading and startup times have been reduced for both desktop and web applications. Web applications, in particular, benefit significantly, as they no longer need to wait for all content files to load before starting.

We have already published Evergine.Runtime.GLB and Evergine.Runtime.STL, and with this release, we are introducing two new runtime libraries:

Evergine.Runtimes.Images

Evergine.Runtimes.Videos

These new runtimes allow for the loading of images and videos at runtime. For more details on performance and supported platforms, continue reading at: New Images and Videos runtimes loaders

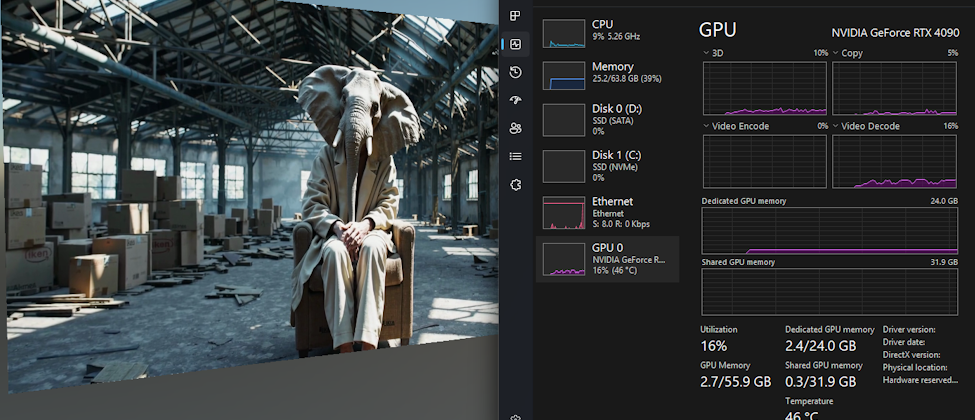

Subsurface Scattering Support

With the growing hype around AI conversational agents, many customers are now working on real-time 3D conversational avatars. To enhance their realism, we have introduced the subsurface scattering technique in the default rendering pipeline.

Subsurface Scattering (SSS) refers to the phenomenon where light scatters as it passes through a translucent surface. This effect is particularly noticeable in thinner parts of a model, such as human ears and noses.

By using SSS, you can create more natural-looking organic materials, such as human skin, making 3D avatars more lifelike and expressive in real-time conversations in any language.

Here you can see a real example using the new rendering pipeline to create photorealistic skin materials:

More detailed information at: Subsurface Scattering (SSS) in Evergine

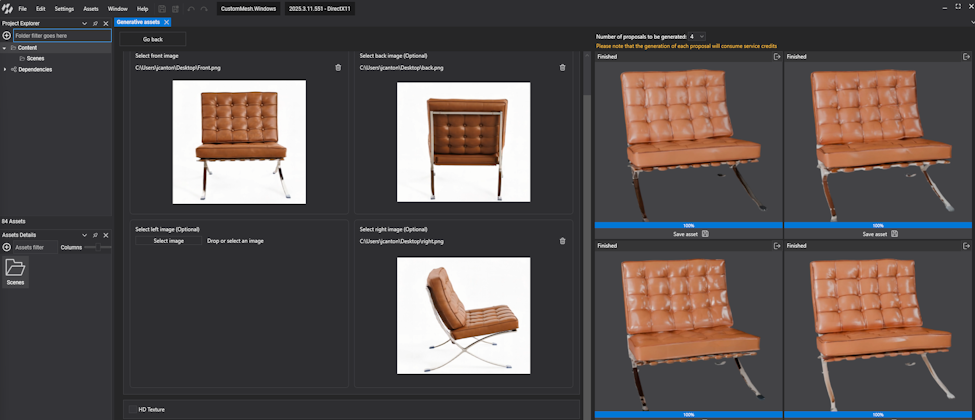

AI assets generation improvements

We continue to improve support for AI-powered asset generation. Now, Evergine supports the creation of 3D models using multi-view images within the Evergine Studio workflow. Users can upload pictures from multiple angles to generate higher-fidelity 3D models.

We have also included high-definition texture generation and support for customized styles to enhance the realism of the final model.

All these 3D assets will be included in the project’s asset content, making it easy to add them to the current scene and check lighting and materials for rapid prototyping.

This generation process is powered by the TripoAi API and its latest model version 2.5. If you want to learn more about how to start using this feature in Evergine Studio, you can find more information at Enhancing AI-Driven 3D Asset Generation

Modularize Standard Effect

In the previous major release, we introduced a new asset called Library Effect, which allows users to write reusable code that can be shared across multiple shaders. Common functions, constants, variables, structs, and directives can be stored in a single library file and used across multiple effects.

In this new release, we have applied this shader architecture to the default Standard Effect, splitting the original single file into multiple libraries: Structures, Common, Material, Lighting, and Shadow.

Creating custom shaders that handle shadows or lighting is now easier than ever in Evergine.

Additionally, we have included a new Lighting Models shader library that supports several popular lighting models, including Phong, Blinn-Phong, Cook-Torrance, and Oren-Nayar.
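For readers unfamiliar with these models, the sketch below compares the specular terms of the two simplest ones, Phong and Blinn-Phong. It is written in Python purely for illustration and is not the code of the shader library; the function names and the convention that all vectors are unit length and point away from the surface are assumptions of this sketch.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(x / length for x in v)


def phong_specular(n, l, v, shininess):
    """Phong: compare the mirrored light direction with the view direction."""
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:  # light is behind the surface
        return 0.0
    r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))  # r = 2(n.l)n - l
    return max(dot(r, v), 0.0) ** shininess


def blinn_phong_specular(n, l, v, shininess):
    """Blinn-Phong: compare the normal with the half vector between l and v."""
    if dot(n, l) <= 0.0:  # light is behind the surface
        return 0.0
    h = normalize(tuple(lc + vc for lc, vc in zip(l, v)))
    return max(dot(n, h), 0.0) ** shininess
```

Both functions peak when the viewer sits exactly in the mirror direction of the light. Blinn-Phong avoids computing the reflection vector and tends to produce a broader highlight for the same exponent, while Cook-Torrance and Oren-Nayar are physically based models that need more inputs, such as roughness.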

If you are interested in learning how to start coding new effects using these libraries, you can find more information here: Standard Effect Modularization

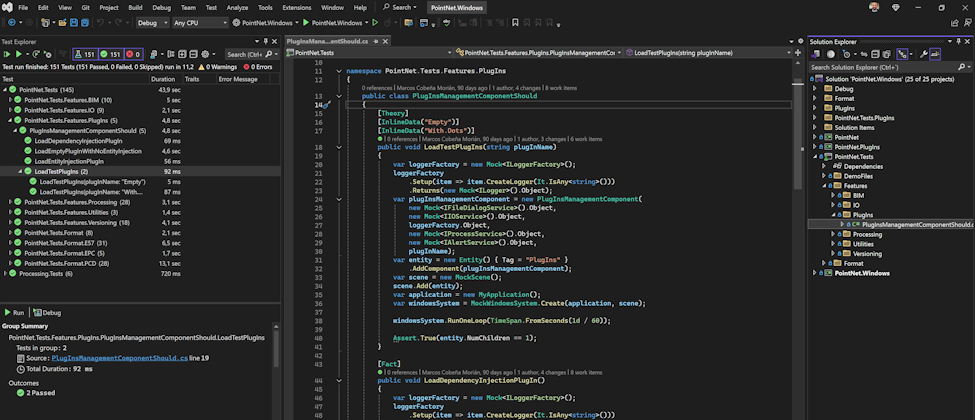

New Evergine Mocks library

Testing is crucial in product development and real industrial projects. However, it can be particularly challenging in 3D graphics development, as these applications rely on GPU-specific code that is often unavailable on build machines.

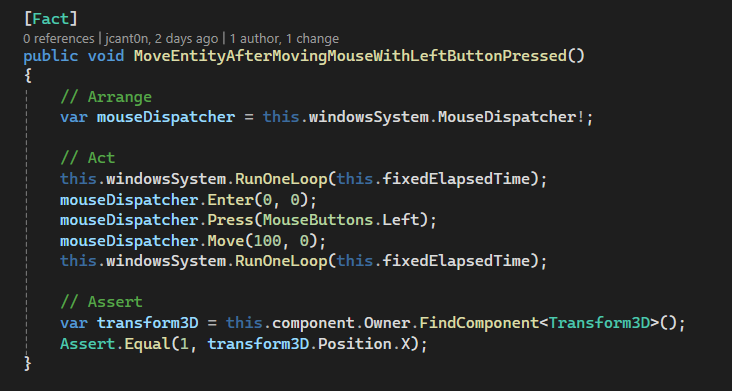

To address this, we are introducing the Evergine.Mocks library in this new release. This official library mocks all low-level objects that interact with the graphics card, enabling users to create unit tests for application logic and run them on machines without a GPU.

Additionally, the library includes helpers to mock the Windows system and input dispatchers, such as mouse, keyboard, and touch. Below is an example of a unit test using those Mocks.

Web Development improvements

We’re excited to introduce two major updates for Evergine Web developers:

- New Serialization converters library:

- Evergine.Serialization.Converters simplifies data exchange between JavaScript and C# in WebAssembly (WASM) projects. It includes built-in JSON converters for Evergine.Mathematics and Evergine.Common types, enabling seamless serialization/deserialization. It supports global integration, custom converters, and ASP.NET Core APIs.

- React Web template improvements:

- We have improved the React web template by replacing Webpack with Vite, significantly reducing startup and reload times for a faster development experience. Additionally, the Evergine canvas is now container-dependent, allowing for greater flexibility in layout design by adapting to its container rather than being tied to the browser window. Lastly, we have updated package dependencies to ensure better performance, security, and compatibility.

These updates enhance development speed, flexibility, and data handling. Visit the next article for more information: Evergine React Template Upgrades and Custom Serialization Support

Future work

Evergine is a dynamic and constantly evolving project, with new features regularly introduced to address emerging industrial challenges. Our commitment to innovation fuels Evergine’s growth, ensuring each release enhances efficiency and productivity across multiple industries.

We are currently exploring the official addition of more industrial file formats, including E57 and PCD for point cloud rendering; USD and USDZ, model formats used by Apple and NVIDIA; and IFC for BIM in the architecture and energy sectors.

We have been working hard to add support for the Arm64 architecture, allowing users to compile projects on the latest macOS, Windows, and Linux laptops. Our goal is to complete this work before the next major version, planned for 2025.

Additionally, we are developing new add-ons, such as a Point Cloud add-on that enables the loading and rendering of large-scale point clouds. We have implemented a high-performance progressive rendering system, which we expect to make publicly available in the next release.

We are also continuing to experiment with AI-based models, aiming to integrate them into Evergine Studio to enhance productivity in asset generation.

Furthermore, we have initiated a new Evergine rendering pipeline with a redesigned architecture. This will allow us to unlock new capabilities, maximizing the performance of modern graphics APIs to handle massive amounts of geometry and more.

In the coming months, we will release minor updates focused on bug fixes, while the next major version is scheduled for September – October 2025.

Thank you for supporting Evergine! We remain committed to continuously improving Evergine’s technology to help companies build better products.

We hope you enjoy this new release!